Just like cinema in its early decades, video games have always been driven on by a breakneck pace of technological progress. As with other software, the history of games has been tied firmly to the history of the computing platforms on which games are developed and played; just as the first computers were big enough to fill rooms, many early games like 1971’s legendary Computer Space had to be immobile coin-ops due to the primitive nature and size of the hardware on which they ran.

At different stages of gaming’s history, technological limitations have imposed different conditions on game development. One of the most significant of these limitations has been extremely restrictive memory storage. This is a brief history of a long-used solution to that problem, one which, while brought on by necessity, may have made a comeback recently because of its fascinating side effects.

Games have been getting consistently bigger in file size and usage of data for decades. Today, many dozens if not hundreds of files can make up the appearance of any given event or happening in our game worlds. As detail of textures, models and effects has almost exponentially increased, so too has the space required on floppies, discs, hard drives and system memory to store and process the components of the myriad wonders our games have brought us. Most of us are now used to games in which all the levels have been pre-built by the game’s developers, so that we all play through mostly the same experiences – we can discuss specific levels, and how we each defeated specific challenges in our way. But as some remember all too well, this was not always the case. Well into the 1980s, game systems could not store enough information in their memory to make these tapestries of levels technically possible. The solution: procedural generation.

It worked as follows: each time a level was loaded, it was being constructed on demand by the system within specific predefined parameters that would help ensure that the level, built using algorithms and pseudo-random number generators, was actually playable. Procedural generation allowed games to increase their length and complexity beyond the likes of Computer Space and Pong; games could have many levels, but those levels did not need to be locally stored at any point.
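To make that idea concrete, here is a minimal sketch in Python. It is not taken from any of the games discussed here; the grid layout, the function names `generate_level` and `is_playable`, and the `wall_chance` parameter are all assumptions made for illustration. A seeded pseudo-random number generator scatters walls, a carved corridor acts as the "predefined parameter" that keeps the level playable, and a simple search confirms that the exit can be reached.

```python
import random
from collections import deque


def generate_level(seed, width=16, height=8, wall_chance=0.3):
    """Build a grid level from a seed; '#' is a wall, '.' is floor.

    Hypothetical example: the same seed always yields the same level,
    so only the seed needs storing, never the level itself.
    """
    rng = random.Random(seed)
    grid = [
        ["#" if rng.random() < wall_chance else "." for _ in range(width)]
        for _ in range(height)
    ]

    # Playability constraint: carve a wandering corridor from the left
    # edge to the right edge so that the exit is always reachable.
    x, y = 0, rng.randrange(height)
    start = (x, y)
    grid[y][x] = "."
    while x < width - 1:
        step = rng.choice(["right", "up", "down"])
        if step == "up" and y > 0:
            y -= 1
        elif step == "down" and y < height - 1:
            y += 1
        else:
            # "right", or a vertical move blocked by the level boundary
            x += 1
        grid[y][x] = "."
    goal = (x, y)
    return grid, start, goal


def is_playable(grid, start, goal):
    """Breadth-first search over floor tiles from start to goal."""
    height, width = len(grid), len(grid[0])
    seen = {start}
    queue = deque([start])
    while queue:
        x, y = queue.popleft()
        if (x, y) == goal:
            return True
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            inside = 0 <= nx < width and 0 <= ny < height
            if inside and grid[ny][nx] == "." and (nx, ny) not in seen:
                seen.add((nx, ny))
                queue.append((nx, ny))
    return False


if __name__ == "__main__":
    level, start, goal = generate_level(seed=7)
    print("\n".join("".join(row) for row in level))
    print("playable:", is_playable(level, start, goal))
```

Changing the seed produces a different level, while reusing a seed rebuilds the same one on demand, which is the trade of storage for computation described above.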

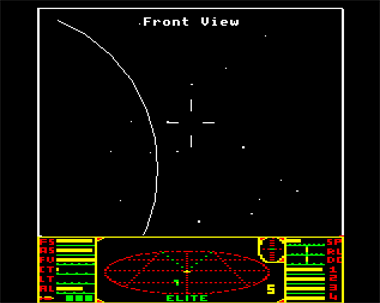

Elite’s use of procedural generation made it feel as though the game held infinite space.

By the 80s, hugely ambitious games were being created using procedural generation. Maybe the most famous of these was David Braben and Ian Bell’s celebrated Elite, a space trading game released in 1984 which featured some eight galaxies, each containing 256 planets with accompanying space stations, all waiting to be explored and all made possible by the game’s algorithms.
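The storage argument can be sketched in a few lines of Python. This is emphatically not Elite's actual algorithm: the syllable table, the `planet` function, the `universe_seed` value and the `economy` and `tech_level` fields are hypothetical, chosen only to show how a galaxy and planet number can be turned into the same details every time without keeping a table of planets anywhere. Only the figures of eight galaxies and 256 planets come from the game as described above.

```python
import random

GALAXIES = 8
PLANETS_PER_GALAXY = 256

# Made-up building blocks for illustration only.
SYLLABLES = ["la", "ve", "ti", "on", "ra", "ce", "di", "us", "so", "en"]
ECONOMIES = ["agricultural", "industrial", "mixed"]


def planet(galaxy, index, universe_seed=1984):
    """Recreate one planet's details on demand from its coordinates."""
    if not (0 <= galaxy < GALAXIES and 0 <= index < PLANETS_PER_GALAXY):
        raise ValueError("no such planet")
    # Each (galaxy, index) pair maps to its own seed, so nothing is stored.
    seed = universe_seed * 10_000 + galaxy * PLANETS_PER_GALAXY + index
    rng = random.Random(seed)
    syllable_count = rng.randint(2, 4)
    name = "".join(rng.choice(SYLLABLES) for _ in range(syllable_count))
    return {
        "name": name.capitalize(),
        "economy": rng.choice(ECONOMIES),
        "tech_level": rng.randint(1, 12),
    }


if __name__ == "__main__":
    print(planet(0, 7))
    print(planet(0, 7) == planet(0, 7))  # same coordinates, same planet
    print(GALAXIES * PLANETS_PER_GALAXY, "planets, none of them stored")
```

With these numbers the whole universe is 8 × 256 = 2,048 planets, yet the only data that has to exist ahead of time is the short list of syllables and the seed.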

In this era, procedural generation became a common feature of many types of games. 1982’s River Raid used it to place its enemies and objects; 1985’s Rescue on Fractalus used it to depict a dynamically mountainous alien world; and 1988’s Exile used it to present the adventures of its space-travelling hero.

In the 90s, however, procedural generation began to lose its importance as large and sophisticated worlds came within the realms of creation and, more importantly, storage. Games became increasingly work-intensive for developers, as all of their levels had to be made by hand, but the pay-off was that gamers had more believable – if more predictable – worlds to explore. Some games, such as the early entries in the Elder Scrolls series, continued to use algorithmic terrain creation, yet by the time The Elder Scrolls III: Morrowind was released in 2002, procedural generation and pseudorandom game content in general were definitely the exception rather than the rule. 2000’s Diablo II represents a relatively rare example of a game from this modern era which used this forgotten design.

Although forced upon developers by the state of computer technology at the time, procedural generation has always had major advantages. When entering an area constructed by procedural generation, you don’t know what to expect, and depending on the genre of the game, the experience can be wildly different between one player and the next. Today people talk about watercooler moments, particularly gripping set pieces discussed by players of a game as shared experiences. Games like Half-Life have relied heavily upon these scripted, memorable and, crucially, repeatable moments. In contrast, procedural generation offers divergent experiences to discuss. It makes a player’s experience of a game more specific to them alone, more individual. It also allows for a great deal more content than pre-built worlds can allow. The thousands of planets in Elite would have taken an enormous amount of development time to craft by hand, despite the fact that Elite was developed in a time when graphics were lacking in detail.

Today, games are enormously work-intensive because their environments are built by hand and gamers expect ever greater realism: suitably sophisticated graphics, physics and sound design, any of which can conflict with the central tenets of procedural generation. This ever-increasing workload is one of the reasons why procedural generation may yet have a future in gaming. If developers could mitigate or overcome the technique’s shortcomings, such as occasionally repetitive or boring environments, it could help to make the gaming experience more individualised again while combating the ever-longer development cycles of modern games – or at least make that time more worthwhile by offering almost endless, randomly generated experiences in the vein of Elite, now with today’s more sophisticated aesthetics and technology.

The ill-fated Hellgate: London could’ve been the game to bring back procedural generation.

In recent years, some fascinating experiments have been undertaken using these principles. Two level-making programs for Doom and Doom II, called SLIGE and OBLIGE, have demonstrated that playable and surprisingly pleasing levels can be created pseudorandomly for first-person shooters, albeit ones with relatively simple sector-based level design and an engine which does not allow rooms to be placed on top of other rooms. Whilst otherwise pretty much an abject failure, Hellgate: London managed to implement some randomised features in its gameplay, in a similar fashion to the Diablo games, as recently as 2007.

Clearly, procedural generation can be used in modern games in a way that amplifies their variety and appeal, but the work in this field isn’t yet moving quickly enough. We are in an era when games are so work-intensive in their aesthetics that many are getting shorter and shorter, especially in genres where procedural generation has rarely, if ever, been attempted. The first-person shooter is the classic example. Half-Life, a triumph of scripting and intricately premade levels, brought into being the modern incarnation of its genre, an incarnation which led to more detailed and work-intensive games, but often shorter ones too. Valve was one of the few FPS developers to resist this shortening of games; whilst both Half-Life and Half-Life 2 were impressively long, many shooters today are worryingly short, a shortcoming which is papered over with fig-leaf words like “cinematic”. Call of Duty: Modern Warfare was one such example. If the likes of OBLIGE were applied to Modern Warfare then admittedly you’d get a very tiresome and predictable procedurally generated game, but that’s because research and effort into procedural generation have not kept pace with the sophistication of other areas of games technology and design. OBLIGE was made by a small team with no funding. If more investment were put into similar ideas then that could translate into a technology which could actually enhance, rather than merely prettify, our gaming experience.

Procedural generation; pseudorandom number generators – they have a future in gaming. Their implementation in modern, complex games is less simple than the gradual process of improving graphics and physics, but they have a potential to impact far more than that. They represent a technology that has mostly been left behind, and that admittedly has shortcomings, but which could also have a profound ability to make games more exciting, unpredictable and individualised. Procedural generation isn’t for every type of game, or for every type of gamer, but its versatility may be greater than has previously been realised and in this era when gamers increasingly wonder what the limits and potentials of gaming are, we should be exploring every possible innovation – even if it is tempting to see one as being a relic of the past.

They say that, separately from other cultures, the Aztecs invented the wheel, but that they then grew bored of it and didn’t think it useful any more. They built, at the time, the largest city on Earth – without using it. Can you imagine what they might have achieved if they had not neglected their invention? The comparison doesn’t work all the way; the Aztecs may have been subjugated by wheel-using Europeans, but gaming’s survival isn’t really at stake. But this is still a crucial time. Gamers want to be taken seriously – let’s hope the industry uses all the best tools at its disposal, and not just the shiniest ones.
